Top Data Science Projects for Final Year with Source Code

A data scientist needs to be a jack of all trades and a master of some. Unless you work for a tech giant like Google or Facebook, you will rarely work solely on modeling data that data engineers have already pulled for you. Many companies lack resources in their data science teams, so to deliver maximum benefit to the business you will have to work across the complete end-to-end data science product development life cycle. Working on solved end-to-end data science projects can prepare you for exactly this situation.

With IBM predicting 700,000 data science job openings by the end of 2020, data science is one of the hottest career choices, and demand for data specialists is expected to keep growing as the market expands. It takes an average of 60 days to fill an open data science position and 70 days to fill a senior data scientist position.

Let’s be friends! Follow me on Twitter and Facebook and connect with me on LinkedIn. You can also visit my website. Don’t forget to follow me here on Medium for more technophile content.

Top Data Science Projects for Final Year

1) Build a Chatbot from Scratch in Python using NLTK

2) Churn Prediction in Telecom

3) Market Basket Analysis using Apriori

4) Build a Resume Parser using NLP (spaCy)

5) Model Insurance Claim Severity

6) Sentiment Analysis of Product Reviews

7) Loan Default Prediction

8) Build an Image Classifier Using TensorFlow

9) PUBG Finish Placement Prediction

10) Price Recommendation using Machine Learning

11) Fraud Detection as a Classification Problem

12) Sales Forecasting

13) Building a Recommender System

14) Employee Access-Challenge as a Classification Problem

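15) Survival Prediction using Machine Learning

16) House Price Prediction using Machine Learning

17) Stock Market Prediction

18) Wine Quality Prediction

19) Macro-economic Trends Prediction

20) Credit Analysis

21) Image Masking

22) Human Activity Recognition

23) Personalized Medicine Recommending System

24) Recommendation System for Retail Stores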

1) Building a Chatbot with Python

Do you remember the last time you contacted a customer service associate, by phone or via chat, about an incorrect item delivered to you by Amazon, Flipkart, or Walmart? Most likely, you were having a conversation with a chatbot instead of a customer service agent. Gartner estimates that 85% of customer interactions will be handled by chatbots by 2022. So what exactly is a chatbot, and how can you build an intelligent chatbot using Python?

What is a Chatbot?

A chatbot is an AI-based digital assistant that can understand human language and simulate human conversations in natural language, giving users prompt answers to their questions just as a real human would. Chatbots help businesses increase their operational efficiency by automating customer requests.

How does a Chatbot work?

The most important task of a chatbot is to analyze the intent of a customer request and extract the relevant entities. The bot then delivers an appropriate response to the user based on that analysis. Natural language processing plays a vital role here, making the interaction between the computer and the human feel like a real conversation. Chatbots typically rely on one or more of the following three techniques:

Pattern Matching: Matches the user's text against predefined patterns to group it and produce a response.

Natural Language Understanding (NLU): The process of converting textual information into a structured data format that a machine can understand.

Natural Language Generation (NLG): The process of transforming structured data into text.

How to build your own chatbot?

In this data science project, you will use NLTK (Natural Language Toolkit), a leading Python library for working with text data. Import the required libraries and load the data. Use pre-processing techniques such as tokenization and lemmatization to prepare the text. Create training and test data, then create a simple set of rules to train the chatbot.
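To illustrate the rule-based part of these steps, here is a minimal, dependency-free sketch of a pattern-matching chatbot. The rules and responses are hypothetical examples for a wrong-item complaint. A simple regex tokenizer stands in for NLTK's `word_tokenize`, and lemmatization is skipped so the snippet runs without any NLTK data downloads. NLTK also ships a comparable regex-driven helper, `nltk.chat.util.Chat`.

```python
import re

# Hypothetical rules for illustration: (regex pattern, response template).
# Rules are checked in order, so more specific intents come first.
RULES = [
    (re.compile(r"\border (?:number )?(?:is )?(\d+)\b"),
     "Thanks! I have logged a return request for order {0}."),
    (re.compile(r"\bwrong (?:item|product)\b"),
     "Sorry about that. Could you share your order number?"),
    (re.compile(r"\b(?:hi|hello|hey)\b"),
     "Hello! How can I help you today?"),
    (re.compile(r"\b(?:bye|goodbye)\b"),
     "Goodbye, have a nice day!"),
]
FALLBACK = "Sorry, I did not understand that. Could you rephrase?"


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def respond(message):
    """Return the response of the first rule whose pattern matches."""
    normalized = " ".join(tokenize(message))
    for pattern, template in RULES:
        match = pattern.search(normalized)
        if match:
            # Captured groups (e.g. an order number) fill the template.
            return template.format(*match.groups())
    return FALLBACK


if __name__ == "__main__":
    print(respond("Hi, I received the wrong item!"))
```

Running the script prints the apology rather than the greeting, because the wrong-item rule is checked before the greeting rule. Rule order is the main design decision in this kind of bot.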

2) Churn Prediction in Telecom Industry using Logistic Regression

According to the European Business Review, telecommunication providers lose close to $65 million a month to customer churn. Isn’t that expensive? With many emerging telecom giants, competition in the sector is increasing and the chances of customers discontinuing a service are high; this is often referred to as customer churn in telecom. Providers that focus on quality service, lower-cost subscription plans, and the availability of content and features, while creating positive customer service experiences, are more likely to retain their customers. The good news is that all these factors can be measured using different layers of data, such as billing history, subscription plans, cost of content, and network/bandwidth utilization, to get a 360-degree view of the customer. This view can be leveraged for predictive analytics to identify the patterns and trends that influence customer satisfaction and help reduce churn.

Considering that customer churn in telecom is expensive and inevitable, leveraging analytics to understand the factors that influence customer attrition, identifying customers that are most likely to churn, and offering them discounts can be a great way to reduce it. In this data science project, you will build a logistic regression machine learning model to understand the correlation between the different variables in the dataset and customer churn.
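To make the modeling step concrete, here is a minimal logistic regression trained with batch gradient descent in plain Python. The two features (tenure in years and a monthly charge scaled to 0..1) and the eight customers are made-up toy values, not the project's dataset. Features are standardized first so that gradient descent converges quickly. In practice you would more likely use a library implementation such as scikit-learn's `LogisticRegression` on the full set of churn features.

```python
import math

# Toy, made-up data: (tenure in years, monthly charge scaled to 0..1).
X = [(0.5, 0.9), (1.0, 0.8), (0.2, 0.7), (0.8, 0.95),
     (4.0, 0.3), (3.5, 0.5), (5.0, 0.2), (2.5, 0.4)]
y = [1, 1, 1, 1, 0, 0, 0, 0]  # 1 = churned, 0 = stayed


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_scaler(X):
    """Return per-feature (mean, std) so features can be standardized."""
    stats = []
    for column in zip(*X):
        mean = sum(column) / len(column)
        std = math.sqrt(sum((v - mean) ** 2 for v in column) / len(column))
        stats.append((mean, std))
    return stats


def scale(x, stats):
    return [(v - m) / s for v, (m, s) in zip(x, stats)]


def train(X, y, lr=0.1, epochs=2000):
    """Fit weights and bias by batch gradient descent on the log loss."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            error = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(d):
                grad_w[j] += error * xi[j]
            grad_b += error
        w = [wj - lr * g / n for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


stats = fit_scaler(X)
w, b = train([scale(x, stats) for x in X], y)


def churn_probability(x):
    """Predicted probability that a customer with raw features x churns."""
    z = sum(wj * xj for wj, xj in zip(w, scale(x, stats))) + b
    return sigmoid(z)


print("weights:", w, "bias:", b)
print("3-month customer, high charge:", churn_probability((0.25, 0.9)))
```

The sign of each learned weight indicates how that variable relates to churn: here tenure pushes the probability down and a higher charge pushes it up.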

3) Market Basket Analysis in Python using Apriori Algorithm

Whenever you visit a retail supermarket, you will find that products such as baby diapers and wipes, bread and butter, pizza base and cheese, and beer and chips are positioned together in the store to boost sales. This is what market basket analysis is all about: analyzing the associations among products that customers buy together. Market basket analysis is a versatile use case in the retail industry that helps cross-sell products in a physical outlet and also helps e-commerce businesses recommend products to customers based on product associations. Apriori and FP-Growth are the most popular machine learning algorithms used for association rule learning in market basket analysis.
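The core idea of Apriori is a level-wise search: an itemset can only be frequent if all of its subsets are frequent, so larger candidates are built only from frequent smaller ones. The plain-Python sketch below runs on a handful of made-up baskets, finds frequent itemsets for an assumed minimum support of 0.5, and derives simple one-item-to-one-item rules with their confidence. Libraries such as mlxtend provide ready-made `apriori` and `association_rules` functions for real datasets.

```python
from itertools import combinations

# Made-up transactions for illustration.
BASKETS = [
    {"bread", "butter", "milk"},
    {"bread", "butter"},
    {"beer", "chips"},
    {"bread", "butter", "chips"},
    {"beer", "chips", "bread"},
    {"diapers", "wipes", "beer", "chips"},
]


def support(itemset, baskets):
    """Fraction of baskets that contain every item in itemset."""
    return sum(itemset <= basket for basket in baskets) / len(baskets)


def apriori(baskets, min_support):
    """Return {frozenset: support} for all frequent itemsets."""
    current = {frozenset([item]) for basket in baskets for item in basket}
    frequent = {}
    k = 1
    while current:
        # Keep only candidates that meet the support threshold.
        level = {c: support(c, baskets) for c in current}
        level = {c: s for c, s in level.items() if s >= min_support}
        frequent.update(level)
        k += 1
        # Join step: combine frequent (k-1)-itemsets into size-k candidates,
        # then prune any candidate with an infrequent (k-1)-subset.
        candidates = {a | b for a in level for b in level if len(a | b) == k}
        current = {
            c for c in candidates
            if all(frozenset(sub) in level for sub in combinations(c, k - 1))
        }
    return frequent


def rules(frequent, min_confidence):
    """Derive (lhs, rhs, confidence) rules from frequent pairs."""
    found = []
    for itemset, s in frequent.items():
        if len(itemset) != 2:
            continue
        for lhs in itemset:
            rhs = next(iter(itemset - {lhs}))
            confidence = s / frequent[frozenset([lhs])]
            if confidence >= min_confidence:
                found.append((lhs, rhs, confidence))
    return sorted(found)


if __name__ == "__main__":
    frequent = apriori(BASKETS, min_support=0.5)
    for lhs, rhs, conf in rules(frequent, min_confidence=0.8):
        print(f"{lhs} -> {rhs} (confidence {conf:.2f})")
```

With these baskets, only {bread, butter} and {beer, chips} survive as frequent pairs. The rules butter -> bread and beer -> chips reach a confidence of 1.0, because every basket containing the left-hand item also contains the right-hand one.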

4) Building a Resume Parser Using NLP (spaCy) and Machine Learning

A resume parser or CV parser is a program that analyzes and extracts CV/resume data according to the job description and returns machine-readable output that is suitable for storage, manipulation, and reporting by a computer. A resume parser stores the extracted information for each resume as a unique entry, helping recruiters get a list of relevant candidates when they search for specific keywords and phrases (skills). Resume parsers let recruiters set specific criteria for a job, and candidate resumes that do not match those criteria are filtered out automatically.

In this data science project, you will build an NLP algorithm that parses a resume and looks for the words (skills) mentioned in the job description. You will use the PhraseMatcher feature of the NLP library spaCy, which performs word/phrase matching on the resume documents. The resume parser then counts the occurrences of words (skills) under various categories for each resume, which helps recruiters screen ideal candidates for a job.
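spaCy's `PhraseMatcher` matches lists of token sequences against a document. The snippet below imitates that idea in plain Python so it runs without a spaCy model. It tokenizes a resume, slides each skill phrase over the tokens, and counts matches per category. The skill taxonomy is a hypothetical example; a real parser would load categories and phrases from the job description or a curated skills list.

```python
import re
from collections import Counter

# Hypothetical skill taxonomy: category -> skill phrases.
SKILLS = {
    "statistics": ["hypothesis testing", "regression", "bayesian inference"],
    "machine learning": ["random forest", "gradient boosting", "neural network"],
    "data engineering": ["sql", "apache spark", "etl"],
}


def tokenize(text):
    """Lowercase and split into tokens, keeping characters like + and #."""
    return re.findall(r"[a-z0-9+#]+", text.lower())


def count_skills(resume_text, skills=SKILLS):
    """Count phrase occurrences per category by matching token sequences."""
    tokens = tokenize(resume_text)
    counts = Counter()
    for category, phrases in skills.items():
        for phrase in phrases:
            pattern = tokenize(phrase)
            n = len(pattern)
            # Slide a window of n tokens over the resume.
            hits = sum(tokens[i:i + n] == pattern
                       for i in range(len(tokens) - n + 1))
            counts[category] += hits
    return counts


if __name__ == "__main__":
    resume = ("Built a random forest and a gradient boosting model; wrote SQL "
              "and Apache Spark ETL jobs. Used regression for pricing.")
    print(count_skills(resume))
```

Matching on tokens rather than raw substrings avoids false hits such as "etl" inside an unrelated longer word.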

5) Modelling Insurance Claim Severity

Filing insurance claims and dealing with all the paperwork with an insurance broker or agent is something nobody wants to spend their time and energy on. To make the claims process hassle-free, insurance companies across the globe are leveraging data science and machine learning. This beginner-level data science project is about how insurance companies use predictive machine learning models to enhance customer service and make the claims process smoother and faster.

Whenever a person files an insurance claim, an insurance agent reviews all the paperwork thoroughly and then decides on the claim amount to be sanctioned. This manual process of estimating the cost and severity of a claim is time-consuming. In this project, you will build a machine learning model to predict claim severity based on the input data.

This project will make use of the Allstate Claims dataset that consists of 116 categorical variables and 14 continuous features, with over 300,000 rows of masked and anonymous data where each row represents an insurance claim.

6) Pairwise Reviews Ranking: Sentiment Analysis of Product Reviews

Product reviews from users are key to making strategic business decisions, as they give an in-depth understanding of what users actually want for a better experience. Today, almost all businesses have review and rating sections on their websites to understand whether a user’s experience has been positive, negative, or neutral. With an overload of reviews and feedback on a product, it is not possible to read each review manually. Moreover, feedback often contains shorthand words and spelling mistakes that can be difficult to decipher. This is where sentiment analysis comes to the rescue.
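Before training a classifier, a lexicon-based scorer makes a useful baseline and shows the basic idea: count positive and negative words, and flip a word's polarity when it follows a negation. The word lists below are tiny, made-up examples. A real project would use a curated lexicon (for example NLTK's VADER `SentimentIntensityAnalyzer`) or a trained model, and would also need to handle the shorthand and misspellings mentioned above.

```python
import re

# Tiny, made-up lexicons for illustration only.
POSITIVE = {"good", "great", "love", "excellent", "fast", "recommend"}
NEGATIVE = {"bad", "poor", "broken", "slow", "hate", "refund"}
NEGATIONS = {"not", "never", "no", "dont", "isnt", "wasnt"}


def sentiment(review):
    """Label a review positive, negative, or neutral from word polarity."""
    # Drop apostrophes so "isn't" becomes "isnt" before tokenizing.
    tokens = re.findall(r"[a-z]+", review.lower().replace("'", ""))
    score = 0
    for i, token in enumerate(tokens):
        polarity = (token in POSITIVE) - (token in NEGATIVE)
        # Flip polarity if the previous word is a negation ("not good").
        if i > 0 and tokens[i - 1] in NEGATIONS:
            polarity = -polarity
        score += polarity
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


if __name__ == "__main__":
    for text in ["Great phone, fast delivery. Would recommend!",
                 "The battery isn't good.",
                 "Not bad at all"]:
        print(f"{sentiment(text):>8}: {text}")
```

The one-word negation window is a deliberate simplification; it handles "not bad" but not longer constructions such as "not very good".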

7) Loan Default Prediction Project using Gradient Boosting

Loans are the core revenue generators for banks, as a major part of a bank's profit comes directly from the interest on these loans. However, the loan approval process is intensive, with extensive validation and verification based on multiple factors, and even after all that verification, banks cannot be sure that a person will be able to repay the loan without difficulty. Today, many banks use machine learning to automate loan eligibility decisions in real time based on factors such as credit score, marital and job status, gender, existing loans, total number of dependents, income, expenses, and others.

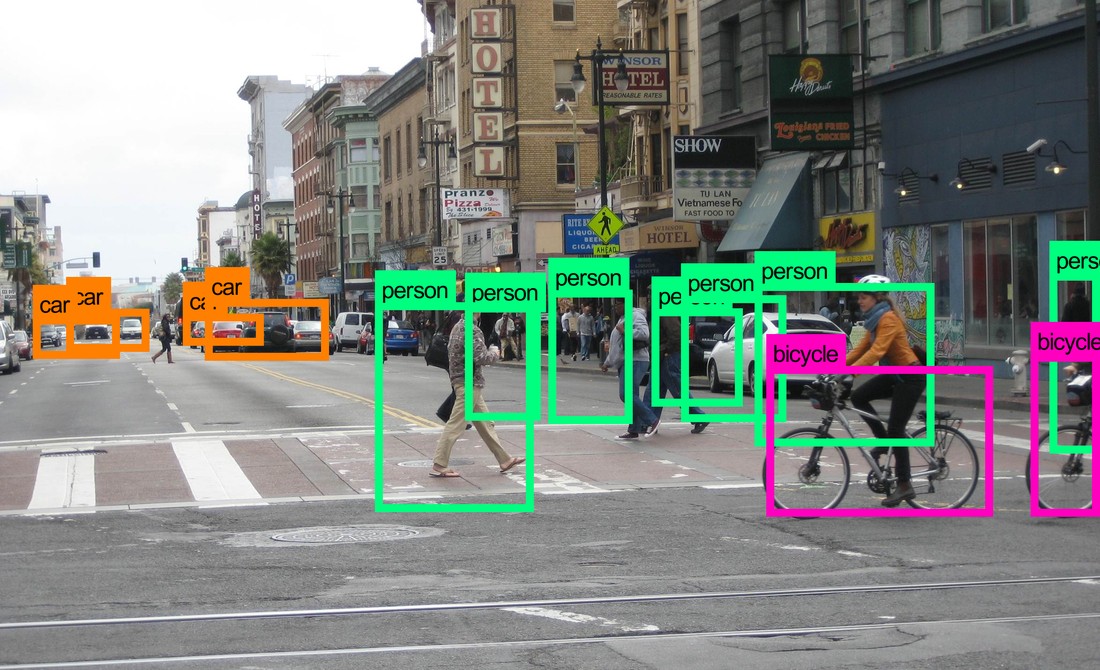

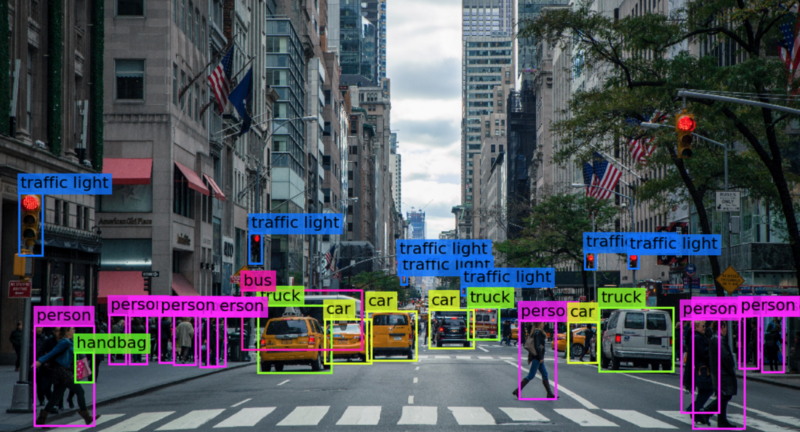

8) Plant Identification using TensorFlow (Image Classifier)

Image classification is a fantastic application of deep learning, where the objective is to assign an entire image to one of a set of predefined classes. Plant identification from images using deep learning is one of the most promising ways to bridge the gap between computer vision and botanical taxonomy. If you want to take your first step into the amazing world of computer vision, this is an interesting data science project to get started with.

9) PUBG Finish Placement Prediction

With millions of active players and over 50 million copies sold, PlayerUnknown’s Battlegrounds (PUBG) enjoys huge popularity across the globe and is among the top five best-selling games of all time. In PUBG, many players compete in each match, each with their own strategy, so predicting a player's finishing placement is a challenging task.

In this data science project, you will essentially develop a winning formula, i.e. build a model that predicts a player’s finishing placement from their in-game statistics.

10) Price Recommendation for Online Sellers

E-commerce platforms today are extensively driven by machine learning algorithms: everything from quality checking and inventory management to sales demographics and product recommendations uses machine learning. Another interesting business problem that e-commerce apps and websites are trying to solve is removing human involvement from the price suggestions given to sellers on their marketplace, making the shopping website or app more efficient. That’s where price recommendation using machine learning comes into play.

11) Credit Card Fraud Detection as a Classification Problem

This is an interesting problem for data scientists who want to step out of their comfort zone by tackling a classification problem with a large imbalance between the sizes of the target classes. Credit card fraud detection is usually framed as a classification problem whose objective is to classify the transactions made on a particular credit card as fraudulent or legitimate. Few credit card transaction datasets are publicly available for practice, as banks do not want to reveal their customer data due to privacy concerns.

Problem Statement

This data science project aims to help data scientists develop an intelligent credit card fraud detection model that identifies fraudulent transactions in a highly imbalanced, anonymized transaction dataset. The project uses a popular Kaggle dataset containing credit card transactions made in September 2013 by European cardholders. It consists of 284,807 transactions, of which 492 (0.172%) were fraudulent. The dataset is therefore highly unbalanced: the positive class (frauds) accounts for only 0.172% of all transactions. It contains 28 anonymized features obtained through a principal component analysis (PCA) transformation. Two additional features have not been anonymized: the time when the transaction was made and the amount in dollars. The amount helps estimate the overall cost of fraud.
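A quick calculation with the figures above shows why plain accuracy is misleading here. It also shows what "balanced" class weights would look like for this dataset, using the n_samples / (n_classes * n_class_samples) heuristic that scikit-learn applies for `class_weight='balanced'`. The "always legitimate" baseline is a hypothetical straw-man model, not a recommended approach.

```python
# Figures from the dataset description above.
TOTAL = 284_807
FRAUD = 492
LEGIT = TOTAL - FRAUD

# A baseline "model" that labels every transaction as legitimate.
accuracy = LEGIT / TOTAL
caught = 0                # it never flags a single fraud
recall = caught / FRAUD   # share of frauds detected

# Balanced class weights: n_samples / (n_classes * n_samples_in_class).
weights = {
    "legit": TOTAL / (2 * LEGIT),
    "fraud": TOTAL / (2 * FRAUD),
}

print(f"fraud share:       {FRAUD / TOTAL:.4%}")
print(f"baseline accuracy: {accuracy:.4%}")
print(f"baseline recall:   {recall:.0%}")
print(f"class weights:     {weights}")
```

The baseline scores above 99.8% accuracy while catching no fraud at all. This is why metrics such as recall, precision, or the area under the precision-recall curve are preferred for this problem, and why each fraud example is weighted roughly 290 times more heavily than a legitimate one in a balanced scheme.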

12) Walmart Store Sales Forecasting

E-commerce and retail companies use big data and data science to optimize business processes and make profitable decisions. Tasks such as predicting sales, offering product recommendations to customers, and managing inventory are elegantly handled with data science techniques. Walmart has used data science techniques to make precise forecasts across its 11,500 stores, which generated revenue of $482.13 billion in 2016. As the name of this project suggests, you will work on a Walmart dataset that consists of 143 weeks of sales transaction records across 45 Walmart stores and their 99 departments.

Problem Statement

This is an interesting data science problem that involves forecasting future sales across various departments within different Walmart outlets. The challenging aspect of this project is forecasting sales during 4 major holidays: Labor Day, Christmas, Thanksgiving, and the Super Bowl. These holiday markdown events are when Walmart makes its highest sales, and by forecasting sales for them, Walmart wants to ensure there is sufficient product supply to meet demand. The dataset contains details such as markdown discounts, consumer price index, whether the week was a holiday, temperature, store size, store type, and unemployment rate.

Objectives of the Data Science Project Using Walmart Dataset

Forecast Walmart store sales across various departments using the historical Walmart dataset.

Predict which departments are affected by the holiday markdown events and the extent of the impact.
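Because holiday weeks matter most here, one option is to score forecasts with a weighted mean absolute error (WMAE) that counts holiday weeks more heavily. The sketch below pairs that metric with a seasonal-naive baseline, which predicts each week's sales as the sales of the same week one season earlier. The holiday weight of 5, the 4-week "season" in the usage example, and all sales figures are assumptions for illustration. With weekly Walmart data the season would be 52 weeks.

```python
def seasonal_naive(history, season=52):
    """Forecast the next `season` weeks by repeating the last season."""
    if len(history) < season:
        raise ValueError("need at least one full season of history")
    return history[-season:]


def wmae(actual, predicted, is_holiday, holiday_weight=5.0):
    """Weighted MAE: holiday weeks get `holiday_weight`, others weight 1."""
    weights = [holiday_weight if h else 1.0 for h in is_holiday]
    total = sum(w * abs(a - p) for w, a, p in zip(weights, actual, predicted))
    return total / sum(weights)


if __name__ == "__main__":
    # Made-up weekly sales: two "seasons" of 4 weeks each.
    history = [100, 120, 90, 200, 110, 125, 95, 210]
    forecast = seasonal_naive(history, season=4)
    actual = [115, 130, 100, 260]
    holidays = [False, False, False, True]
    print("forecast:", forecast)
    print("WMAE:", wmae(actual, forecast, holidays))
    print("plain MAE:", wmae(actual, forecast, holidays, holiday_weight=1.0))
```

In this example the holiday-week miss of 50 dominates the weighted score (33.125 versus a plain MAE of 16.25). This is exactly the behavior you want when holiday weeks drive the business decision. Any real model should at least beat this baseline.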

13) Building a Recommender System: Expedia Hotel Recommendations

Everybody wants the products they use to be personalized to their tastes. A recommender system aims to model a particular user's preference for a product. This data science project studies Expedia's online hotel booking system by recommending hotels to users based on their preferences. The Expedia dataset was released as a data science challenge on Kaggle to contextualize customer data and predict which of 100 different hotel groups a customer is likely to stay at.

Problem Statement

The Expedia dataset consists of 37,670,293 entries in the training set and 2,528,243 entries in the test set. The training set covers data from 2013 to 2014, and the test set covers 2015. The dataset contains details about check-in and check-out dates, user location, destination details, origin-destination distance, and the actual bookings made. It also has 149 latent features, extracted from hotel reviews provided by travelers, that relate to hotel services such as proximity to tourist attractions, cleanliness, laundry service, etc. All user IDs present in the test set are also present in the training set.
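A common first baseline for this kind of problem is popularity-based: for each destination, recommend the hotel groups (clusters) that were booked most often there, then pad the list with globally popular clusters. The records below are made up, the field layout (destination id, hotel cluster) is a simplifying assumption, and returning k = 5 recommendations is an assumed default.

```python
from collections import Counter, defaultdict

# Made-up booking records: (destination id, booked hotel cluster).
BOOKINGS = [
    (8250, 1), (8250, 1), (8250, 45), (8250, 79), (8250, 45), (8250, 1),
    (12812, 23), (12812, 23), (12812, 5),
]


def fit_popularity(bookings):
    """Count how often each hotel cluster was booked per destination."""
    per_destination = defaultdict(Counter)
    for destination, cluster in bookings:
        per_destination[destination][cluster] += 1
    overall = Counter(cluster for _, cluster in bookings)
    return per_destination, overall


def recommend(destination, model, k=5):
    """Top-k clusters for a destination, padded with globally popular ones."""
    per_destination, overall = model
    local = per_destination.get(destination, Counter())
    ranked = [cluster for cluster, _ in local.most_common()]
    for cluster, _ in overall.most_common():
        if cluster not in ranked:
            ranked.append(cluster)
    return ranked[:k]


if __name__ == "__main__":
    model = fit_popularity(BOOKINGS)
    print(recommend(8250, model))    # destination seen in training
    print(recommend(999, model))     # unseen destination: global popularity
```

Padding with global favourites guarantees a full recommendation list even for destinations that never appear in the training data. Personalized models can then be compared against this simple baseline.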

14) Amazon Employee Access Data Science Challenge

Employees may have to apply for access to various resources during their career at a company. Determining resource access privileges for employees is a popular real-world data science challenge for large companies like Google and Amazon. At a company like Amazon, with highly complicated employee and resource situations, this was previously handled manually by human resource administrators. Amazon was interested in automating the process of granting employees access to computer resources to save money and time.

Problem Statement

The Amazon Employee Access Data Science Challenge dataset consists of historical data from 2010-2011 recorded by human resource administrators at Amazon Inc. The training set consists of 32,769 samples and the test set of 58,922 samples. Each sample has eight features, each indicating a different role or group that the Amazon employee belongs to.


15) Predict the Survival of Titanic Passengers: Would you survive the Titanic?

This is one of the most popular data science projects for beginners in the global community, because solving it gives a clear understanding of what a typical data science project consists of.

Problem Statement

This data science problem involves predicting the fate of the passengers aboard the RMS Titanic, which famously sank in the Atlantic Ocean after colliding with an iceberg during its voyage from the UK to New York. The aim of this project is to predict which passengers would have survived, based on personal characteristics such as age, sex, ticket class, etc.

16) House Price Prediction using Machine Learning

If you think real estate is one industry that has been left untouched by machine learning, think again. The industry has been using machine learning algorithms for a long time, and a popular example is the website Zillow. Zillow has a tool called Zestimate that estimates the price of a house on the basis of public data. If you’re a beginner, it’d be a good idea to include this project in your list of data science projects.

Problem Statement

In this data science project, the task is to implement a regression algorithm that predicts the price of a house using the Zillow dataset. The dataset has about 60 features spread across two files, ‘train_2016’ and ‘properties_2016’, which are linked to each other through a feature called ‘parcelid’.
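Because the training targets and the property features live in separate files, the first step is a join on `parcelid`. The sketch below shows an inner join in plain Python on a few made-up rows. Apart from `parcelid`, the column names (`target`, `bedroomcnt`, `finishedsquarefeet`) are assumptions for illustration, not a description of the real files. With pandas, the equivalent would be `train.merge(properties, on="parcelid", how="inner")`.

```python
import csv
import io

# Made-up stand-ins for the two files; real column names may differ.
TRAIN_CSV = """parcelid,target
1001,0.025
1002,-0.010
1004,0.040
"""
PROPERTIES_CSV = """parcelid,bedroomcnt,finishedsquarefeet
1001,3,1500
1002,2,900
1003,4,2100
"""


def load(text):
    """Parse CSV text into a list of dicts keyed by column name."""
    return list(csv.DictReader(io.StringIO(text)))


def join_on_parcelid(train_rows, property_rows):
    """Inner join: attach property features to each training row."""
    by_id = {row["parcelid"]: row for row in property_rows}
    joined = []
    for row in train_rows:
        features = by_id.get(row["parcelid"])
        if features is None:
            continue  # no property record for this parcel
        joined.append({**features, **row})
    return joined


rows = join_on_parcelid(load(TRAIN_CSV), load(PROPERTIES_CSV))
for row in rows:
    print(row)
```

Parcel 1004 is dropped because it has no property record, and parcel 1003 is ignored because it has no target. Checking how many rows a join drops is a useful sanity check before modeling.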

17) Stock Market Prediction

“Our favorite holding period is forever.” (Warren Buffett)

For most stock investors, the favorite question is “How long should we hold a stock?” Every investor wants to know how to avoid being too fearful or too greedy, and not all of them have Warren Buffett to guide them at every step. We’d suggest that you stop looking for him. Instead, build your own stock market predictor with artificial intelligence tools like machine learning. The basic approach is simple enough that you can consider adding this to your list of data science projects.

Problem Statement

Build a Stock Market Forecasting system by implementing Machine learning algorithms on the EuroStockMarket Dataset. The dataset has closing prices of major European Stock Indices: Germany DAX (Ibis), Switzerland SMI, France CAC, and UK FTSE, for all business days.

18) Wine Quality Prediction

On the weekend, most of us like having a fancy dinner with our loved ones. While kids define a fancy dinner as one with pasta, adults like to add a cherry on top by pairing the Italian dish with a classic glass of red wine. But when it comes to shopping for that bottle, a few of us get confused about which one to buy. Some believe that the longer a wine has aged, the better it tastes; others suggest that sweeter wines are of better quality. To find a more precise answer, you can try building your own wine quality predictor.

Problem Statement

Use the red wine dataset to build a Wine Quality Predictor System.

The objective of the Wine Quality Prediction Data Science Project

Analyze which chemical properties of a red wine influence its quality using a red wine dataset available on Kaggle.
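A simple first pass at "which chemical properties influence quality" is to rank features by their Pearson correlation with the quality score. The rows below are made-up values for two properties of the kind found in red wine datasets (alcohol and volatile acidity). Keep in mind that correlation only captures linear association, not causation, and a full analysis would cover every feature.

```python
import math

# Made-up rows: (alcohol %, volatile acidity g/L, quality score 0-10).
ROWS = [
    (9.4, 0.70, 5), (9.8, 0.88, 5), (10.5, 0.60, 6),
    (11.2, 0.45, 6), (12.0, 0.35, 7), (12.8, 0.30, 8),
]


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


alcohol, acidity, quality = zip(*ROWS)
# Rank features by the strength (absolute value) of their correlation.
ranking = sorted(
    {"alcohol": pearson(alcohol, quality),
     "volatile acidity": pearson(acidity, quality)}.items(),
    key=lambda kv: abs(kv[1]),
    reverse=True,
)
for name, r in ranking:
    print(f"{name:>17}: r = {r:+.2f}")
```

Ranking by absolute value keeps strong negative relationships (here, higher volatile acidity going with lower quality) from being hidden below weak positive ones.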

19) Macro-economic Trends Prediction

We often hear on news channels that country XYZ is going to be one of the biggest economies in the world by 2030. If you have ever wondered what such statements are based on, allow me to explain. News channels rely on statisticians and data scientists to come up with such predictions. These data scientists analyze financial datasets from various countries and submit their conclusions, which then make the headlines. If you are interested in a project in this area, you are on the right page.

Problem Statement

Design a macro-economic trends predictor using Machine Learning algorithms on a Financial Dataset from Kaggle.

20) Credit Analysis

Many multinational banks now rely on artificial intelligence techniques to classify loan applications. They ask their customers to submit specific details about themselves.

They then utilize these details and implement machine learning algorithms on the collected data to understand the ability of their customers to repay the loan they have applied for. You can also attempt to build a project around this using the German Credit Dataset.

Problem Statement

Use the German Credit Dataset to classify loan applications. The dataset contains information on 1,000 loan applicants, with 20 feature variables for each applicant. Of these 20 attributes, three take continuous values and the remaining seventeen take discrete values.

21) Image Masking

We often come across images whose background we wish to remove so that we can use them for specific purposes. Carvana, an online start-up, has attempted to build an automated photo studio that takes 16 photographs of each vehicle in its inventory. Carvana captures these photographs in high resolution with bright reflections. However, the background sometimes makes it difficult for customers to look closely at the vehicle of their choice. An automated tool that removes background noise from the captured images and highlights only the image’s subject would therefore work like magic for the start-up and save its photo editors countless hours. You can also implement such an image masking system that automatically removes the background noise.

Problem Statement

Using the Carvana dataset, implement a neural network algorithm to design an image masking system that removes the photo studio background. This will make it easy to prepare images with backgrounds that bring the car’s features into the limelight.
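Whatever network you train, you need a way to score its masks against hand-labelled ones. Segmentation masks are commonly evaluated with the Dice coefficient, 2|A ∩ B| / (|A| + |B|), which compares predicted and true masks pixel by pixel. The sketch below implements it for small binary masks stored as nested lists. The tiny 4x4 masks are made up, and the `smooth` term is an assumed convention that makes two empty masks score 1 instead of dividing by zero.

```python
def dice(pred, truth, smooth=1e-7):
    """Dice coefficient between two binary masks (nested lists of 0/1)."""
    intersection = total = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            intersection += p * t  # pixel is "car" in both masks
            total += p + t         # car pixels counted in either mask
    return (2 * intersection + smooth) / (total + smooth)


if __name__ == "__main__":
    truth = [[0, 0, 0, 0],
             [0, 1, 1, 0],
             [0, 1, 1, 0],
             [0, 0, 0, 0]]
    # Prediction with one extra (false positive) pixel.
    pred = [[0, 0, 0, 0],
            [0, 1, 1, 1],
            [0, 1, 1, 0],
            [0, 0, 0, 0]]
    print(f"Dice: {dice(pred, truth):.3f}")
```

Here 4 overlapping pixels out of 4 + 5 labelled pixels give a Dice score of 8/9, roughly 0.889. For real images you would compute the same quantity on NumPy arrays or tensors for speed.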

22) Human Activity Recognition

Gone are the days when we used an analogue watch to check the time. With exciting watches being designed by many international brands, people are gradually switching to smartwatches. Smartwatches, the cool watches of the 21st century, have made their way into many households, mainly because of the attractive features they offer: from heart-rate monitoring and ECG monitoring to workout tracking, they can do almost anything.

If you have used such a watch, you may recall that it often tells you how well you slept. So how can a device that never sleeps tell you about your sleep? To find the answer, you can do a simple data science project that associates a dataset of a few people’s daily activities with the data collected by various sensors attached to them.

Problem Statement

In this data science project, you are expected to use machine learning algorithms to assign each record in the Human Activity Recognition Dataset one of these six classes: WALKING, WALKING_UPSTAIRS, WALKING_DOWNSTAIRS, SITTING, STANDING, LAYING.

23) Personalized Medicine Recommending System

The recent talk of the town among cancer researchers is how treating diseases like cancer with the help of genetic testing could revolutionize cancer research. This revolution has been partially realized thanks to the significant efforts of clinical pathologists. A pathologist first sequences the genes of a cancer tumour and then interprets the genetic mutations manually. This is a tedious, time-consuming process, because the pathologist has to search the clinical literature for evidence to support each interpretation. Machine learning algorithms can make this process much smoother.

If you want to explore the field that integrates both Medicine and Artificial Intelligence, this project will be a good start in that direction.

Problem Statement

Automate the classification of every genetic mutation in a cancer tumour using the dataset prepared by Memorial Sloan Kettering Cancer Center (MSKCC). The dataset distinguishes mutations that contribute to tumour growth (drivers) from neutral mutations (passengers), and it has been manually annotated by world-renowned researchers and oncologists.

24) Recommendation System for Retail Stores

If you have ever shopped online, you must have seen the website recommending a few products to you. Have you ever wondered how such websites come up with products you are highly likely to be interested in? That’s because machine learning algorithms are running in the background, and this project is all about them.

The objective of the Recommendation System Data Science Project:

Work on the dataset of retail stores, build an efficient recommendation system for them and perform Market Basket Analysis.


